In my last post I went over using a Service Principal account to access a Synapse SQL Pool with Power BI. I even showed how you could go across different tenants in my example. In this post instead of going against a SQL Server source I am going to switch to a Synapse Data Explorer (Kusto) Pool. Once again we will use the SQL Server ODBC driver just like we did in my last post.

For this post I will be using the same Service Principal and driver that we used in the previous post, so if you need any guidance with that or link to download the driver please reference that post here.

For the example that I will present here I will be do the following:

- Create a Synapse Data Explorer Pool and load data

- Grant the Service Principal account permissions to the Synapse Data Explorer Pool database (NOAA Storm Events data)

- Configure the ODBC System DSN to access the Synapse Data Explorer Pool

- Create a dataset and report using the ODBC source connection in Power BI

- Publish the report in a different tenant than the Synapse Workspace

- Configure a gateway to use in the Power BI service with the dataset

- Refresh the Power BI dataset and view the report in the service

As mentioned I will be reusing some of the items that were covered in the previous post, the only difference is that we will be going against a Synapse Data Explorer (Kusto) Pool. If you are already familiar with Azure Data Explorer (ADX) you can review the document here to see how these compare.

First step will be to create the Synapse Data Explorer Pool within the same Synapse Workspace we used in the previous post with the Synapse Dedicated Pool. This can be done in a few different places within the Azure Portal (Synapse Workspace Overview page or Analytics pools section) or in the Synapse Studio (Data or Manage Hub). In this example I am doing it in the Azure Portal and simply click the New Data Explorer Pool option in the Synapse Workspace Overview page and complete the required information.

I didn’t make any other setting changes and simply created the pool, this will take a few minutes to complete (approximately 15 minutes for me). In the meantime I have decided to use the NOAA Storm Events data which is a sample you will explore if you doing any of the Kusto Query tutorials on the Microsoft Learn site. I went ahead and downloaded all of the StormEvents_details files (73 of them) and extracted them to a folder on my laptop (1.31 GB total).

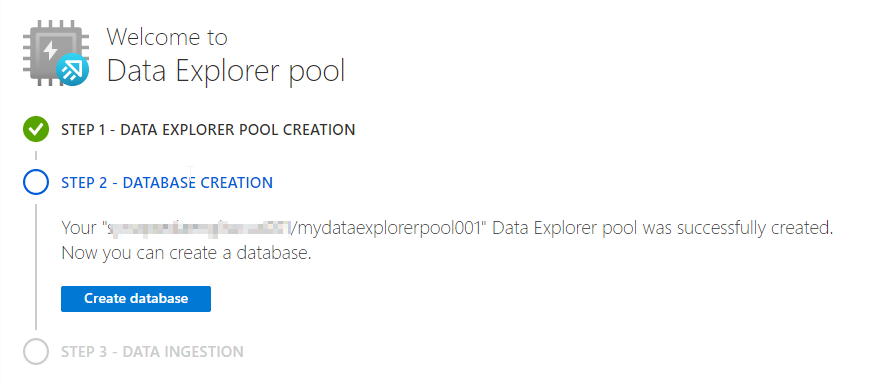

Once the pool is created on the Overview page you will be presented with a wizard that you can complete to create the initial database and load data.

Simply click the Create database button, enter a database name and set the day settings, and then click Create.

Creating the database will not take long and after you click the Refresh button on the Overview page you will be at Step 3 – Data Ingestion. Click on the Ingest new data button which will then launch Azure Data Explorer window with a connection to the newly created database. If we right-click on the database we can select the option to Ingest data which will launch us to another wizard to name the table, provide the source, set the schema, and then ingest (load) the data into the new table.

Provide a name for the new table on the Destination page and then on the next page in the wizard we will set the source.

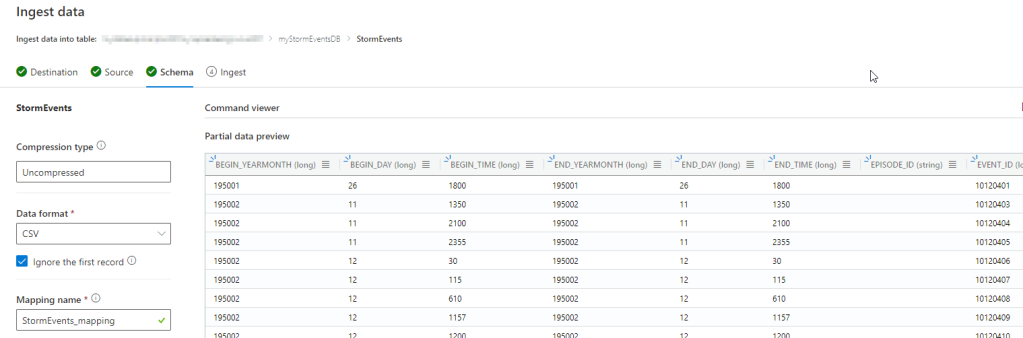

For the Source page you can simply drag and drop the 73 storm event csv files and it will start to upload them which will take a few minutes to complete (approximately 8 minutes for me). Next you can go to the Schema page and review what was done based on analyzing the data.

Go ahead and review the Schema page to how the data was mapped if you want and then click on the Start ingestion button.

Now the storm events data will be loaded into the table and this will take a few minutes to complete but should go relatively quickly and once this is done you will see a Data preview.

Now we are ready to move on to the second step and grant the Service Principal account access to the database. This can be done at the cluster level or database. In the Azure Portal within the Data Explorer Pool Permissions page you can add the Service Principal to the AllDatabasesViewer role.

Or in Azure Data Explorer in the Query tab with the connection to the new database you can use the following syntax –> .add database <databaseName> users (‘aadapp=<application id>;<tenant id>’) ‘<app name>’ as shown below

The third step is to create the ODBC System DSN which is pretty similar to how we did it for the Synapse Dedicated SQL Pool, but there is a slight twist which took me awhile to figure out and had to go into the registry to make a modification to the ODBC entry🤓

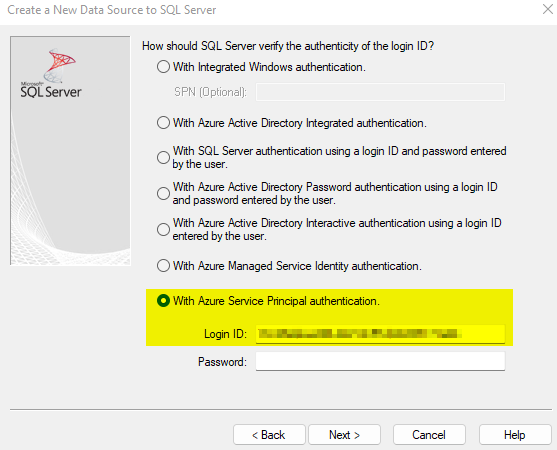

Just like we did in the previous post we will go into the ODBC Data Source Administrator (64-bit), click on the System DSN tab, and click Add. As before use the same ODBC Driver XX for SQL Server, click Finish, enter a name for the DSN, and then provide the Data Explorer Pool connection information which in this case will be in the format of <pool name>.<workspace name>.kusto.azuresynapse.net and you can get this from the Overview page of the Data Explorer Pool in the Azure Portal. It is the URI information and simply remove the “https://” information.

On the next page just like before we will select the option for Azure Service Principal authentication, provide the application id for the Login ID, and then click Next. There is no need to put the Secret Value yet for the Password because we need to simply create the entry first in the registry to modify it and then we can come back in with the password to do an official test.

On the next page you can go ahead and set the default database setting and then click Next.

On the next page you can click Finish and then OK. Now if you would have provided the Secret Value and then tried to test the data source connection you would have been provided this message about “No tenant identifying information found in either the request or implied by any provided credentials” as shown below.

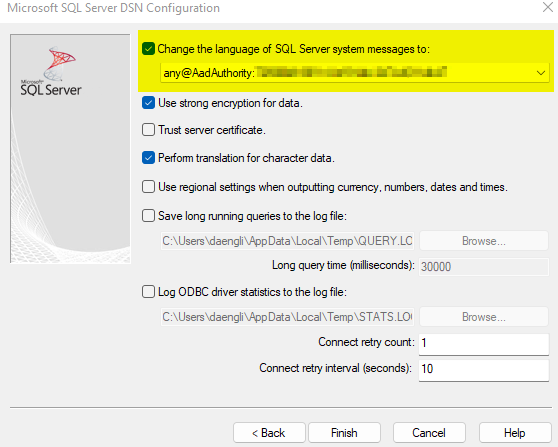

This is what we are going to fix in the registry and it took me awhile to track down the solution and thanks to this article here on MS-TDS clients and Kusto I determined how to include the tenant information into the “Language” field in the ODBC data source🤠 Once you have created the System DSN you need to use the Registry Editor to add a new String Value to the ODBC entry called “Language” with the “any@AadAuthority:<tenant id>” format as shown in the link above.

Now if you switch back to the ODBC Data Source Administrator you can configure the System DSN that was created to include the Secret Value for the Password and then as you click through to the final page you will see the language information included now based on what you entered into the registry.

And when you go to Test Data Source now you should see (fingers crossed) a “TESTS COMPLETED SUCCCESSFULLY!” message.

The fourth step now is the easy part, just like we did in the last post, open Power BI Desktop, use the ODBC connection, and connect to our Kusto database to create our dataset.

There is just one table so this is pretty easy. We can clean up the column names to make them more readable, add measures, hide columns that are not needed, etc. and then build out a report. As you can see below in the report we have over 1.7M records loaded and the size of the Power BI file once all of the data is loaded is 188 MB which is much less than the 1.31 GB of CSV files👍

For the fifth step now we simply need to publish the report to the Power BI service which is straight forward and just like the last post and so is the sixth step where we configure the gateway connection once published to include the new DSN data source. Just remember to replicate the ODBC configuration that we did above on the gateway machine that is being used by the Power BI service.

Just make sure to follow the same steps as the last post and reference that if you have any questions.

For the final step you can now go ahead and test everything out by running the dataset refresh and then viewing and interacting with the report.

And if we interact with the report everything should be good and just remember once again this demo like the last post is going across tenants using the Service Principal account.

That completes this post covering the topic of being able to use a Service Principal account to connect to Synapse Data Explorer Pool for a Power BI report.

Let me know what you think and thanks for checking out this post, I hope you found this useful:)

Subscribe to Blog feed

Subscribe to Blog feed